EU AI Act and Risk Assessment: A Risk-Based Approach to AI Governance

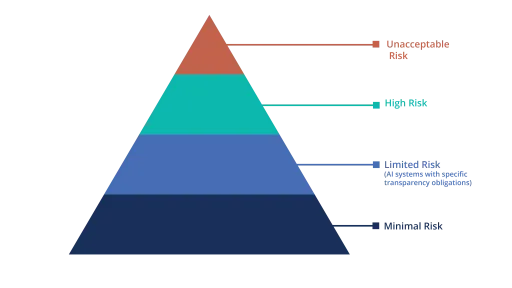

The EU AI Act employs a risk-based classification model that categorizes AI systems into four tiers of risk (AI Act | Shaping Europe’s digital future). This framework determines which rules and obligations apply to an AI system based on its potential to cause harm. The tiers range from minimal risk (with no new legal requirements) up to unacceptable risk (applications that are banned outright). Below is an overview of each risk category and what it means for AI systems:

- Unacceptable Risk (Prohibited AI) – AI systems that pose a clear threat to safety or fundamental rights are banned under the Act (AI Act | Shaping Europe’s digital future). This includes, for example, tools for social scoring of individuals, AI that manipulates vulnerable people, or certain predictive policing and real-time biometric identification systems (AI Act | Shaping Europe’s digital future) (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland). Such uses are deemed incompatible with EU values (human dignity, democracy, etc.), and the Act prohibits them outright.

- High Risk – AI systems that can significantly impact health, safety, or fundamental rights fall into this category (AI Act | Shaping Europe’s digital future). These are allowed on the market only if they comply with strict regulations. Examples include AI in critical infrastructure (e.g. traffic control safety systems), in education or employment decisions (like exam scoring or CV-sorting tools), credit scoring algorithms, certain medical devices, and law enforcement or border control AI tools (AI Act | Shaping Europe’s digital future). High-risk AI is subject to extensive requirements (detailed below) to mitigate risks before deployment.

- Limited Risk (Transparency Obligations) – This category covers AI systems that are generally low-risk but may require transparency measures to ensure proper use (AI Act | Shaping Europe’s digital future). In practice, this means users should be informed when they are interacting with AI in cases where it might not be obvious (AI Act | Shaping Europe’s digital future). For example, chatbots or virtual assistants must disclose that they are not human, and generative AI that creates images, text or deepfakes must clearly label its outputs as AI-generated (AI Act | Shaping Europe’s digital future). These measures are meant to preserve user trust and avoid deception, addressing the “transparency risk” of AI.

- Minimal or No Risk – All other AI systems fall into this baseline category. These are applications deemed to pose minimal risk, such as AI-driven spam filters or video game AI (AI Act | Shaping Europe’s digital future). The EU AI Act does not impose new rules on minimal-risk AI, and most AI systems in use today are in this category (AI Act | Shaping Europe’s digital future). They must still comply with existing laws, but the Act itself places no additional requirements on them. (Organizations should nonetheless monitor whether an AI system’s use or scope changes over time, as it could be reclassified into a higher-risk category if its functionality or context of use evolves (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland).)
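The four tiers above can be pictured as an ordered lookup, checked from most to least restrictive. The following is a minimal sketch only: the `RiskTier` enum, the `classify` function, and the use-case labels are hypothetical names built from the examples in this section, not definitions taken from the Act, and a real classification requires legal analysis of the Act's own text.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, incomplete lookup sets based on the examples in the text.
# They are illustrative labels, not the Act's legal definitions.
PROHIBITED_USES = {"social_scoring", "manipulation_of_vulnerable_people"}
HIGH_RISK_USES = {
    "critical_infrastructure",
    "exam_scoring",
    "cv_sorting",
    "credit_scoring",
    "border_control",
}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


def classify(use_case: str) -> RiskTier:
    """Return the most restrictive tier whose example set contains use_case."""
    if use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    # Anything not listed falls through to the baseline tier.
    return RiskTier.MINIMAL


for name in ("social_scoring", "cv_sorting", "chatbot", "spam_filter"):
    print(name, "->", classify(name).value)
```

Checking the prohibited set first mirrors the idea that the strictest applicable tier wins. Because the fallback is the minimal tier, an unrecognized label is silently treated as low risk, so a production inventory would probably want an explicit "unclassified" state instead.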

Requirements for High-Risk AI Systems

High-risk AI systems are permitted in the EU, but only under strict conditions. The Act lays out extensive compliance requirements to ensure these systems are trustworthy and safe before they can be put on the market (AI Act | Shaping Europe’s digital future). Providers (developers or those who place the system on the market) bear the heaviest obligations, and deployers (operators who use the AI) have their own duties. Key requirements for high-risk AI include:

- Risk Management: Implement a continuous risk management system throughout the AI’s lifecycle (AI Act | Shaping Europe’s digital future). This means proactively identifying potential risks to health, safety, or fundamental rights that the AI may pose, assessing their likelihood and severity, and taking steps to mitigate or eliminate those risks. Risk assessment isn’t a one-time exercise – it must be ongoing, with regular review and updates as the AI is developed, tested, deployed, and maintained (Article 9: Risk Management System | EU Artificial Intelligence Act).

- Data Governance: Ensure the quality of datasets used to train and test the AI (AI Act | Shaping Europe’s digital future). Training data should be relevant, representative, and free of errors as far as possible to minimize biased or discriminatory outcomes (AI Act | Shaping Europe’s digital future). The Act expects providers to manage data provenance and biases – for instance, by documenting data sources and processing and by applying techniques to mitigate unfair bias (What is the EU AI Act? | IBM). High-risk AI should be built on data that meets specific quality criteria to support accuracy and safety.

- Technical Documentation & Record-Keeping: Prepare and maintain thorough technical documentation for the AI system (AI Act | Shaping Europe’s digital future). This documentation must cover the system’s design, intended purpose, performance characteristics, and compliance measures. It should contain all information needed for authorities to assess the system’s compliance with the Act (AI Act | Shaping Europe’s digital future). Providers must also enable traceability by logging the AI’s operation (automatically recording its outputs or decisions) so that its functioning can be audited (AI Act | Shaping Europe’s digital future). In short, organizations need to document how the AI was developed, how it works, its limitations, and the steps taken to address risks (What is the EU AI Act? | IBM).

- Transparency and User Information: Offer clear information and instructions to users or deployers about the AI system’s capabilities, intended use, and limitations (AI Act | Shaping Europe’s digital future). High-risk AI shouldn’t be a black box to its operators. For example, if you provide a high-risk AI to a client (deployer), you must give them information on how to properly use the system, what not to do, and how to interpret the outputs safely (AI Act | Shaping Europe’s digital future). Certain AI must also inform individuals that they are interacting with a machine when relevant (overlap with the limited-risk transparency rules above).

- Human Oversight: Design the system to allow for appropriate human oversight (AI Act | Shaping Europe’s digital future). This could mean having humans in the loop to monitor the AI’s decisions or intervene if something goes wrong. The Act requires that high-risk AI be controllable – for instance, by enabling a human operator to override or stop the AI if it starts to act outside of its intended bounds (AI Act | Shaping Europe’s digital future). The goal is to prevent a high-risk system from operating unchecked or causing harm without the possibility of human intervention.

- Robustness, Accuracy, and Cybersecurity: Ensure the AI system is robust and secure in its performance (AI Act | Shaping Europe’s digital future). High-risk AI should be designed to minimize errors or failures, and it must withstand tampering attempts and cyberattacks (AI Act | Shaping Europe’s digital future). Providers need to test and validate that the system meets appropriate accuracy levels and will behave reliably under normal conditions and reasonably foreseeable misuse. Inaccurate or unstable AI in a high-risk context (like a medical diagnostic tool or an autonomous driving system) could have serious consequences, so the Act sets a high bar for technical reliability.

- Quality Management and Monitoring: In addition to the above, providers of high-risk AI must implement an internal quality management system (QMS) and a post-market monitoring plan (What is the EU AI Act? | IBM). A QMS is a set of processes to ensure consistent development practices and compliance (similar to what is required in medical device or product safety regulations). Providers have to actively monitor the AI system once it’s in use, collecting data on its performance and any incidents or malfunctions (What is the EU AI Act? | IBM). If new risks are discovered in the field, the provider should take action (e.g. software updates or even recall the system if needed). This ongoing monitoring ties back into updating the risk management process continuously.
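The risk management requirement above (identify risks, assess likelihood and severity, mitigate, and review continuously) is often operationalized as a risk register. The sketch below assumes a simple likelihood-times-severity score on 1 to 5 scales and a review threshold of 10; the scales, the threshold, and the names `Risk` and `open_risks` are illustrative assumptions, since the Act does not prescribe a scoring formula.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    severity: int    # 1 (negligible) to 5 (critical); hypothetical scale
    mitigated: bool = False

    @property
    def score(self) -> int:
        # Simple product score; other weightings are equally plausible.
        return self.likelihood * self.severity


def open_risks(register: list[Risk], threshold: int = 10) -> list[Risk]:
    """Unmitigated risks at or above the threshold, highest score first."""
    flagged = [r for r in register if not r.mitigated and r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Biased ranking of job applicants", likelihood=3, severity=5),
    Risk("Model drift after data update", likelihood=4, severity=3),
    Risk("Log storage outage", likelihood=2, severity=2),
    Risk("Adversarial input bypasses filter", likelihood=3, severity=4, mitigated=True),
]

for risk in open_risks(register):
    print(risk.score, risk.description)
```

Re-running `open_risks` whenever the register changes (new test results, field incidents, a model update) is one way to keep the assessment ongoing rather than a one-time exercise.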

Compliance with these requirements is mandatory for high-risk AI systems. Before such a system can be sold or deployed, the provider must complete a conformity assessment to verify that it meets the EU AI Act’s standards (similar to a certification process). Once compliant, the AI system carries a CE marking, and many high-risk systems must also be registered in an EU database of high-risk AI. Failure to meet these obligations can result in the AI system being barred from the EU market and other penalties (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland).

Aligning Risk Management and Compliance Processes

Because of the Act’s rigorous demands, organizations will need to adjust their internal processes to align with the EU AI Act. The regulation isn’t just about product requirements – it also pushes companies to enhance their governance around AI. Key adjustments for organizations include:

- Integrate AI into Enterprise Risk Management: Treat AI risks as part of your overall risk and compliance framework, not in isolation. The EU AI Act’s approach means companies must integrate AI risk management into their existing governance structures (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland). For example, if your company has risk assessment procedures or an internal control system (like those used for safety or data privacy compliance), you should extend it to cover AI systems. This integration ensures that AI projects are continuously monitored and that they don’t “slip through the cracks” of oversight. It also helps prevent an AI system from inadvertently drifting into a higher risk category without the organization noticing (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland).

- Inventory and Classification of AI Systems: A practical first step is to identify all AI systems your organization uses or plans to use, and determine which risk category each falls under (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland). Creating an up-to-date AI inventory is crucial. For each AI application, ask: Is it a high-risk use case as defined by the Act (Annex III and related laws)? Is it a limited-risk application that needs a transparency label? Or is it minimal-risk with no action needed (beyond normal good practice)? Documenting this categorization will tell you where to focus your compliance efforts.

- Gap Assessment and Process Updates: Once you know your AI systems’ risk levels, perform a gap analysis against the Act’s requirements (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland). Compare your current development processes, data management, and oversight practices with what the law will require. For instance, if you have a high-risk AI system, do you already have a formal risk management process for it? Are you documenting the design and keeping logs? Identify any missing controls or documentation. Then, update your processes or policies to fill those gaps. This may involve creating new standard operating procedures for AI development, updating data handling policies to meet the Act’s data quality standards, or establishing new review checkpoints (e.g. an ethics or compliance review before an AI tool is deployed).

- Strengthen Documentation and Record-Keeping: Organizations should reinforce their documentation practices for AI. Technical documentation will not be just an internal artifact; it could be requested by regulators to prove compliance. Businesses may need to invest in tools or frameworks to document AI systems’ design, training data, testing results, and risk assessments in a structured way. Likewise, ensure that your AI systems can log key events and decisions, and that these logs are stored securely for the required retention period (the Act requires logs to be kept for a period appropriate to the system’s intended purpose, generally at least six months, and deployers of high-risk AI must retain the logs that are under their control) (What is the EU AI Act? | IBM). This might require updates to IT systems or deploying new monitoring software.

- Assign Roles and Accountability: Aligning with the Act may require clearly assigning responsibility for AI compliance within your organization. You might designate an “AI compliance officer” or expand the role of your data protection officer or risk officer to cover AI governance. The Act’s quality management requirements call for an accountability framework that sets out the responsibilities of management and staff, and a new European AI Office provides oversight at the EU level (The European AI Act: Requirements for High-Risk AI Systems | Emergo by UL). Internally, ensure that someone is accountable for each high-risk AI system’s compliance – from development to post-market monitoring. Also, train your staff (engineers, product managers, legal, etc.) on the new requirements so that compliance is built into the AI development lifecycle, much like privacy by design under GDPR.

- Prepare for Conformity Assessment and Reporting: For high-risk AI, be ready to undergo assessments (either self-assessment or third-party, depending on the case) before putting the system on the EU market. This might mean adopting certain standards or best practices (the Act references harmonized standards for AI). Also set up channels to report serious incidents or malfunctions of high-risk AI to authorities (post-market surveillance obligations), similar to how medical device companies report adverse events. In essence, companies should treat some AI systems with the same rigor as they treat product safety and regulatory compliance in other domains.
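The inventory and gap-assessment steps above can be sketched as a small data structure plus a set difference. This is an illustration under stated assumptions: the control names in `REQUIRED_CONTROLS`, the `AISystem` record, and the example systems are hypothetical, chosen to echo the high-risk requirements listed earlier rather than to reproduce the Act's wording.

```python
from dataclasses import dataclass, field

# Hypothetical control names per tier; not an official checklist.
REQUIRED_CONTROLS = {
    "high": {
        "risk_management",
        "data_governance",
        "technical_documentation",
        "logging",
        "human_oversight",
        "post_market_monitoring",
    },
    "limited": {"transparency_notice"},
    "minimal": set(),
}


@dataclass
class AISystem:
    name: str
    tier: str  # "high", "limited" or "minimal"
    controls_in_place: set[str] = field(default_factory=set)


def gap_analysis(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Map each system name to the sorted list of controls it still lacks."""
    return {
        system.name: sorted(REQUIRED_CONTROLS[system.tier] - system.controls_in_place)
        for system in inventory
    }


inventory = [
    AISystem("cv-screening", "high", {"risk_management", "logging"}),
    AISystem("support-chatbot", "limited", {"transparency_notice"}),
    AISystem("spam-filter", "minimal"),
]

for name, gaps in gap_analysis(inventory).items():
    print(name, "missing:", gaps or "nothing")
```

Keeping the required controls in one table makes it straightforward to re-run the analysis when a system moves to a different tier or when the list of obligations is updated.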

By taking these steps, organizations can build AI risk management into their culture, rather than seeing the Act as just a one-time checklist. In the long run, these practices not only ensure legal compliance but also reduce the chance of AI failures and build trust with users and regulators.
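For the record-keeping step in particular, one possible shape for an auditable log entry is a timestamped JSON line, paired with a check that prevents deletion before a configured retention period has passed. The field names, the `make_log_record` and `may_delete` helpers, and the 365-day value are all assumptions for illustration; the actual retention period is a legal determination, not something this sketch decides.

```python
import json
from datetime import datetime, timedelta, timezone


def make_log_record(system_id: str, event: str, output: str, when: datetime) -> str:
    """Serialize one decision event as a JSON line with a UTC timestamp."""
    return json.dumps({
        "system_id": system_id,
        "event": event,
        "output": output,
        "timestamp": when.astimezone(timezone.utc).isoformat(),
    })


def may_delete(record_json: str, now: datetime, retention: timedelta) -> bool:
    """True only when the record is older than the configured retention period."""
    created = datetime.fromisoformat(json.loads(record_json)["timestamp"])
    return now - created > retention


created_at = datetime(2025, 1, 1, tzinfo=timezone.utc)
record = make_log_record("cv-screening", "ranking", "candidate_42: shortlisted", created_at)
retention = timedelta(days=365)  # placeholder value; set by legal review in practice

print(record)
print(may_delete(record, datetime(2025, 6, 1, tzinfo=timezone.utc), retention))
print(may_delete(record, datetime(2026, 6, 1, tzinfo=timezone.utc), retention))
```

Using timezone-aware UTC timestamps avoids ambiguity when logs from several deployments are compared during an audit.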

Implications for Businesses and Developers

The EU AI Act has far-reaching implications for companies building or using AI, especially regarding liability, documentation, and accountability:

- Product Liability and Accountability: The Act effectively holds AI providers to a product safety standard – if an AI system is high-risk, the provider is responsible for its safety and compliance. If you fail to meet the required safeguards and your AI causes harm, it could be deemed a defective product or a violation of the law. The EU is also updating its broader liability framework (e.g. the Product Liability Directive and a proposed AI Liability Directive) to make it easier for people harmed by AI to seek compensation (The EU AI Act: What Businesses Need To Know | Insights - Skadden) (Artificial intelligence and liability: Key takeaways from recent EU legislative initiatives | Global law firm | Norton Rose Fulbright). In practice, this means developers and companies deploying AI will face greater accountability: you may need to prove that you followed all required steps (such as risk assessments and testing) if something goes wrong. Maintaining comprehensive documentation and logs, as required by the Act, will be critical not just for compliance but also as evidence to defend your organization in case of investigations or lawsuits.

- Documentation and Record-Keeping Burdens: From a developer/business perspective, the Act introduces significant paperwork and process overhead. High-risk AI providers must produce technical documentation, keep records of performance and compliance, and possibly register their systems in an EU database (The EU AI Act: What Businesses Need To Know | Insights | Skadden, Arps, Slate, Meagher & Flom LLP). Developers will need to budget time and resources for writing documentation, conducting bias testing on data, drafting risk assessment reports, and updating these documents throughout the AI’s lifecycle. This could slow down the development cycle compared to the move-fast-and-break-things approach – but it forces teams to be more rigorous. Companies should view this as building “trust by design”: the documentation and transparency can become a selling point, demonstrating that an AI product was developed responsibly.

- Compliance Costs and Processes: Complying with the Act will incur costs – potentially hiring extra compliance personnel, consulting with legal experts, and implementing new tools for audit and data management. Some businesses may need to establish an internal AI compliance program, similar to the privacy programs many firms built for GDPR. This includes training developers on the new rules, performing internal audits of AI systems, and keeping up with updates (since the European Commission can adjust the list of high-risk AI uses or issue standards over time (EU AI Act: different risk levels of AI systems - Forvis Mazars - Ireland)). Smaller companies and startups will need to plan for these requirements early if they intend to offer high-risk AI in the EU, or consider partnering with compliance experts. On the upside, having robust risk controls might reduce the chance of AI failures and the associated costs of recalls or reputational damage.

- Liability and Penalties: Non-compliance is met with hefty fines, which raises the stakes for businesses. The AI Act as adopted sets fines of up to €35 million or 7% of global annual turnover (whichever is higher) for the most serious violations, such as deploying prohibited AI systems (The EU AI Act: What Businesses Need To Know | Insights | Skadden, Arps, Slate, Meagher & Flom LLP). Most other infringements (like not meeting the requirements for high-risk systems or the transparency obligations) can draw fines of up to €15 million or 3% of turnover, a scale comparable to GDPR (The EU AI Act: What Businesses Need To Know | Insights | Skadden, Arps, Slate, Meagher & Flom LLP). These penalties mean AI compliance is a board-level issue – senior management must pay attention, since a violation could have major financial and reputational consequences. Beyond fines, there’s also the risk of products being banned from the market until fixed. Liability extends along the value chain as well: if an importer, distributor, or even a user substantially modifies an AI system or uses it in an unapproved high-risk manner, they can be deemed a provider and held fully responsible for compliance (The EU AI Act: What Businesses Need To Know | Insights | Skadden, Arps, Slate, Meagher & Flom LLP). In short, everyone in the AI supply chain needs to be diligent.

- Enhanced Accountability and Governance: The Act drives a culture of accountability in AI development. Companies will need to instill governance practices like oversight boards or ethics committees for AI, similar to how data privacy or financial risks are governed. There is an expectation of “human accountability” for AI decisions – whether it’s a human on the loop or clear assignment of responsibility for outcomes. Developers should anticipate more scrutiny on how their AI models make decisions (e.g. regulators might ask for an explanation of an algorithm’s decision process as part of an audit). The requirement for human oversight implies that AI shouldn’t operate in a vacuum; organizations should define who monitors the AI’s outputs and how to intervene if needed (AI Act | Shaping Europe’s digital future). All these measures collectively mean that businesses must be much more deliberate in how they design and deploy AI, treating it with the same seriousness as any other regulated product or service.
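The "whichever is higher" rule in the penalties bullet above is simple arithmetic: the ceiling is the larger of a fixed amount and a percentage of global annual turnover. The sketch below shows only that mechanism. The function name `fine_ceiling` is hypothetical, and the cap and percentage passed in are placeholders, not figures from the Act; substitute the values that apply to the infringement in question.

```python
def fine_ceiling(fixed_cap_eur: int, turnover_pct: int, global_turnover_eur: int) -> int:
    """Upper bound of a fine: the higher of a fixed cap and a share of turnover."""
    # Integer arithmetic keeps euro amounts exact.
    turnover_based = global_turnover_eur * turnover_pct // 100
    return max(fixed_cap_eur, turnover_based)


# Placeholder inputs: a 10 million euro cap and a 5% turnover share.
large_company = fine_ceiling(10_000_000, 5, 500_000_000)
small_company = fine_ceiling(10_000_000, 5, 100_000_000)

print(large_company)  # turnover-based amount exceeds the fixed cap
print(small_company)  # fixed cap exceeds the turnover-based amount
```

For a large company the percentage dominates, which is why the turnover-based limb is what makes the exposure a board-level concern.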

Broader Impact on AI Governance Worldwide

The EU AI Act is the first comprehensive AI regulation of its kind, and its influence is likely to be global. Much like the EU’s GDPR reshaped global data privacy standards, the AI Act is expected to become a benchmark for AI governance beyond Europe (What is the EU AI Act? | IBM). Countries around the world are closely watching the EU’s approach:

- International Standard-Setting: The Act’s risk-based framework (prohibiting the most harmful AI and regulating high-risk AI) may serve as a template for other governments and international bodies. Already, discussions in the G7 and OECD reflect similar principles of risk-based AI regulation and trustworthy AI. We may see other jurisdictions adopt comparable tiered approaches, or at least align on definitions of high-risk AI uses (for example, Canada’s proposed AI law and some US legislative proposals echo risk classifications and transparency requirements).

- “Brussels Effect” on AI Development: Global companies that operate in the EU will likely implement the Act’s standards across all their operations for consistency, a phenomenon known as the Brussels Effect. This means even users outside the EU could indirectly benefit from the Act’s protections as companies raise their AI practices to the highest common standard. Developers might choose to build compliance (e.g. transparency features, logging, bias mitigation) into their AI systems from the ground up, rather than creating one version for Europe and another for elsewhere. Over time, this could lead to a convergence of AI norms, where the EU’s stringent requirements elevate industry best practices worldwide (What is the EU AI Act? | IBM).

- Prompting Global Regulation and Dialogue: The enactment of the EU AI Act has spurred conversations in other countries about how to regulate AI. It adds urgency for policymakers in the US, UK, and Asia to consider their own AI governance frameworks to avoid a regulatory vacuum. For instance, the United Kingdom has published an AI governance white paper (taking a lighter, principles-based approach initially), and the United States is exploring AI legislation while relying on sectoral approaches and voluntary frameworks. The EU’s move puts pressure on them to at least address high-risk AI scenarios (like AI in hiring or credit) through some regulations or risk management requirements. We are likely moving toward a world where AI systems, especially high-stakes ones, face regulatory oversight in many markets, not just the EU.

- Collaboration and Compliance Complexity: With different jurisdictions developing AI rules, businesses that operate internationally will need to navigate a patchwork of regulations. The EU AI Act might push toward more collaboration on global AI standards to ease compliance – for example, through international standards organizations (ISO/IEC) creating technical standards that fulfill EU requirements and can be adopted elsewhere. In the short term, organizations should keep an eye on regulatory developments in all regions where they deploy AI. The EU Act’s influence means it’s a good baseline for designing robust AI governance internally, even if you’re not EU-based. Adhering to it can position a company as a leader in responsible AI, which is increasingly important for customer trust and brand reputation worldwide.

In summary, the EU AI Act introduces a rigorous risk-based regime for AI. Organizations looking to align with it should start by classifying their AI systems by risk, bolstering risk assessment and mitigation practices for any high-risk AI, and embedding AI oversight into their governance frameworks. By complying with the Act’s transparency, documentation, and safety requirements, businesses not only avoid penalties but also contribute to a safer and more trustworthy AI ecosystem – a step that is likely to become the norm globally (What is the EU AI Act? | IBM). Compliance will require effort and adjustment, but it offers clear takeaways: know your AI risks, control and document your AI systems diligently, and be prepared to stand accountable for how your AI is used. This proactive approach will put organizations in the best position as AI governance becomes an international priority.