Public Perception of AI

Broadly, public awareness of AI and concern about it have both surged. Surveys show that most people expect AI to dramatically affect their lives, but many feel uneasy about it. For example, a 2023 Ipsos survey found that globally 66% expect “dramatic” AI impacts (up from 60%), yet 52% say they feel nervous about AI (a 13-point rise). In the US, Pew data show that 52% of adults are more concerned than excited about AI. Notably, only about one-third believe AI will improve jobs or the economy. At the same time, awareness of generative tools is high: roughly 63% of survey respondents had heard of ChatGPT, and about half of those use it weekly. Sentiment has also shifted in both directions: in a global YouGov poll, 32% said their opinion of generative AI had improved over the past year (versus 22% whose opinion had become more negative).

Common public concerns – job loss, privacy, misinformation and bias – remain strong. Over half of Americans worry that AI will harm news or politics (e.g. 58% fear election interference, 53% fear misinformation). About 41% say AI does more harm than good when it comes to protecting personal data. Meanwhile, 55–61% of people want more personal control over AI and tighter regulation. Demographically, younger and more educated respondents tend to be more optimistic about AI’s benefits, whereas older or less-educated groups are more skeptical. In short, by mid-2025 public opinion is a mix of fascination and anxiety: high awareness and usage (especially of chatbots and image generators) coexist with persistent worries about risks and calls for regulation.

Media Coverage of AI

News media attention to AI has exploded. In the past five years, the volume of AI stories (and of Google searches for “AI”) jumped roughly tenfold. High-profile product releases (e.g. ChatGPT in late 2022, image generators, new LLMs) drove peaks of coverage. Analysis of global media indicates that coverage has often been industry-driven and skewed toward optimism. One study found that across major outlets, articles frequently frame AI in positive economic terms (productivity gains, innovation), with industry voices dominant. Indeed, many headlines highlight breakthroughs and business opportunities. That said, more balanced reporting is emerging: a 2024 analysis found that positive and negative sentiment in AI news headlines is roughly equal overall. In practice, a given outlet may oscillate between hype and caution, covering impressive use cases one day and warning of “killer robots,” bias or surveillance the next.

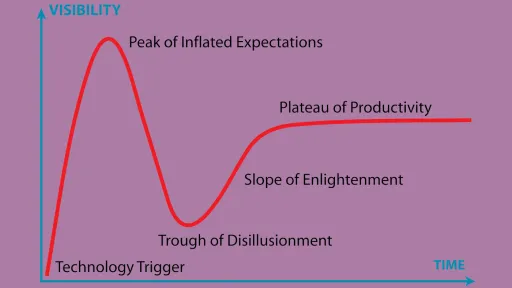

Coverage in 2024–2025 has broadened from pure hype to include risks and regulation. Major outlets increasingly report on issues like deepfakes, privacy breaches, and the need for AI governance. For example, articles have detailed AI-assisted political disinformation campaigns, workplace privacy debates, and ethical scandals. Lawmakers’ responses have featured prominently: media have extensively covered the EU’s new AI Act, proposed U.S. AI regulations, and corporate commitments to safe AI. Gartner analysts explicitly place generative AI at the “Trough of Disillusionment” in the Hype Cycle, noting that organizations are hitting the limits of experimentation. Even as coverage volume remains high, many stories now question the hype and focus on real-world challenges and guardrails. (For context, Gartner projects that generative AI spending will keep soaring, to ~$644 billion in 2025, even as peak hype cools.) In sum, AI stories remain a staple of tech and business media, but their tone is shifting from uncritical enthusiasm toward a more mixed narrative.

Technological Capabilities

- Generative AI & NLP: Large language and multimodal models continue to advance. OpenAI’s GPT-4.5 (early 2025) is described as the “largest and best model for chat” to date, with smoother conversational skills and fewer hallucinations. GPT-4.5 can better follow user intent and draw on a broader knowledge base (OpenAI reports it “hallucinates less” than GPT-4). Tech analysts note GPT-4.5 enhances “natural, fluid, humanlike” interaction and emotional intelligence. Competing models have also leapt forward: Anthropic’s Claude 3 family (Haiku, Sonnet, Opus, announced Mar 2024) set new industry benchmarks, with Claude 3 Opus “exhibiting near-human levels of comprehension and fluency on complex tasks” across reasoning and knowledge tests. Google’s Gemini 1.5 (early 2024) similarly improved efficiency and context length: the mid-sized “1.5 Pro” model matches the performance of the previous largest model while using much less compute. Crucially, Gemini 1.5 introduced a massive context window (standard 128K tokens, with private previews up to 1 million tokens), roughly an order of magnitude larger than prior models. These advances mean very long documents and complex dialogues can be handled more effectively.

However, limitations remain. All current LLMs still occasionally make factual errors or biased inferences. Despite gains in scale and hardware, fully reliable common-sense reasoning is still elusive. OpenAI itself emphasizes that GPT-4.5 is a research preview while it explores the model’s strengths and limitations. In other NLP domains, dedicated models shine: for instance, Google’s Pathways and multilingual models now support many languages robustly. AI’s sheer compute hunger is another issue: analysts note that ~80% of enterprise generative AI spending is on hardware (servers and devices) rather than software, reflecting the intense resource demands of these models.

- Computer Vision & Multimodal: Vision capabilities have also advanced via large models. Generative image models are now far more reliable. OpenAI’s latest image-generation engine (introduced Mar 2025) can accurately render detailed scenes and even legible text within images. It is powered by the GPT-4o model family, which leverages GPT-4’s multimodal architecture. Adoption was immediate: over 130 million users created 700 million images in the first week that ChatGPT offered image generation, suggesting the technology is both powerful and popular. Beyond generation, results on vision understanding are mixed. Multimodal LLMs like GPT-4/GPT-4.5 can answer questions about images, do OCR, and reason about pictures. For example, GPT-4.1 successfully handled OCR and visual Q&A tests in independent benchmarks, correctly reading text from a photo. However, fine-grained tasks remain hard: GPT-4.1 failed to precisely localize an object (a dog) in an image, illustrating that current multimodal LLMs still struggle with exact object detection. Core computer-vision systems (e.g. CLIP, Meta’s Segment Anything) now form standard building blocks for image recognition and segmentation. These tools enable labeling and analysis of images at scale, but real-world deployment (e.g. autonomous car vision) is still maturing. New systems increasingly combine text, vision, and even audio: LLMs can ingest text+image prompts, and some emerging research models accept video or 3D inputs. Nevertheless, truly reliable video understanding and robotics perception remain in early stages.

- Robotics & Autonomous Systems: In robotics, AI is making incremental progress toward practical deployments. In 2025 we see the first commercial trials of humanoid robots: for instance, Boston Dynamics plans to deploy its latest Atlas robot in a Hyundai factory to handle heavy lifting. Similarly, Agility Robotics’ bipedal robot Digit has begun moving inventory in a warehouse, and Figure’s humanoid robot reached early commercial customers. These represent a shift from labs to limited real‐world use. However, nearly all deployed robots remain specialized: wheeled vehicles for transport, robotic arms for assembly, etc. Fully autonomous robots (especially general-purpose humanoids) are still years away from broad rollout, due to challenges in perception, dexterity, and safety. Autonomous vehicles illustrate this: while advanced driver-assist features are common, no car is yet fully self-driving at scale. On the positive side, AI-driven automation is clearly improving worker productivity. A recent PwC analysis found that industries most exposed to AI have seen 3–4× higher productivity growth, and wages in those sectors are rising twice as fast as elsewhere. This suggests that in practice AI is currently amplifying human workers’ value more than simply replacing them.
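As a rough sanity check on two figures quoted in the capability notes above (Gemini 1.5's context window and the first-week ChatGPT image numbers), the arithmetic can be sketched as follows. The token and usage counts come from the text; the words-per-token and words-per-page conversion factors are illustrative assumptions, not published specifications, so the page estimates are only indicative.

```python
# Back-of-envelope checks on two figures quoted above.
# Inputs marked "quoted" come from the text; "assumption" marks values that do not.

CONTEXT_STANDARD_TOKENS = 128_000    # quoted: Gemini 1.5 standard context window
CONTEXT_PREVIEW_TOKENS = 1_000_000   # quoted: private-preview context window
WORDS_PER_TOKEN = 0.75               # assumption: rough rule of thumb for English text
WORDS_PER_PAGE = 500                 # assumption: one dense page of prose

IMAGES_FIRST_WEEK = 700_000_000      # quoted
USERS_FIRST_WEEK = 130_000_000       # quoted as "over 130 million"


def approx_pages(tokens: int) -> float:
    """Convert a token budget into an approximate page count (assumed factors)."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


context_ratio = CONTEXT_PREVIEW_TOKENS / CONTEXT_STANDARD_TOKENS
images_per_user = IMAGES_FIRST_WEEK / USERS_FIRST_WEEK

print(f"Preview window vs standard window: {context_ratio:.1f}x")
print(f"128K tokens is roughly {approx_pages(CONTEXT_STANDARD_TOKENS):.0f} pages")
print(f"1M tokens is roughly {approx_pages(CONTEXT_PREVIEW_TOKENS):.0f} pages")
print(f"Average images per user (upper bound): {images_per_user:.1f}")
```

Under these assumptions the preview window is a little under 8 times the standard one, and because the user count is given as "over 130 million", the per-user average of about 5.4 images is an upper bound.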

Enterprise AI Adoption

Business adoption is booming, but uneven. By early 2024, major surveys found that roughly 65% of companies were regularly using generative AI (about double the rate reported in 2023) and that 72% overall were using some form of AI. Spending is rising explosively: Gartner projects global generative AI expenditure of about $644 billion in 2025 (a 76% YoY jump). Much of that money goes into infrastructure: according to Gartner, ~80% of GenAI budgets in 2025 will go to hardware (PCs, servers, devices).
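To make the spending figures concrete, the short sketch below derives what the quoted numbers imply: the 2024 baseline behind a 76% jump to $644 billion, and the dollar split implied by an ~80% hardware share. Only the three constants come from the text; the derived values are plain arithmetic on rounded inputs, not figures reported by Gartner.

```python
# Figures quoted in the text (Gartner projection); derived values are arithmetic only.
SPEND_2025_BN = 644.0    # projected 2025 generative AI spending, USD billions
YOY_GROWTH = 0.76        # quoted year-over-year increase
HARDWARE_SHARE = 0.80    # quoted approximate hardware share of spending


def implied_prior_year(spend: float, growth: float) -> float:
    """Back out the previous year's spend from this year's total and its growth rate."""
    return spend / (1.0 + growth)


def split_budget(spend: float, hardware_share: float) -> tuple[float, float]:
    """Return (hardware, everything else) for a given total and hardware share."""
    hardware = spend * hardware_share
    return hardware, spend - hardware


spend_2024_bn = implied_prior_year(SPEND_2025_BN, YOY_GROWTH)
hardware_bn, other_bn = split_budget(SPEND_2025_BN, HARDWARE_SHARE)

print(f"Implied 2024 spending: ~${spend_2024_bn:.0f}B")
print(f"2025 hardware: ~${hardware_bn:.0f}B, software and services: ~${other_bn:.0f}B")
```

On these inputs the implied 2024 baseline is roughly $366 billion, with about $515 billion of the 2025 total going to hardware and about $129 billion to everything else.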

Certain sectors lead the charge. Financial services (banking, fintech) and technology/software are often cited as having the highest AI maturity. BCG identifies fintech, software, and banking as having the highest concentration of “AI leaders”, likely because these industries can quickly monetize AI in products and processes. Healthcare and life sciences are also investing heavily (e.g. drug discovery using AI), as are retail and manufacturing (for supply-chain optimization and smart automation). In general, firms are experimenting with AI across the enterprise: McKinsey found many companies now deploy AI in two or more functions (a sharp rise from a year earlier). Common use-cases include customer-service chatbots, AI‐assisted coding/analytics, personalized marketing content, and automated risk and compliance review. For example, Deloitte highlights cases where banks use generative AI to triage massive volumes of cybersecurity alerts, and tech companies apply AI to speed up sales workflows.

Reported benefits are significant for some leaders. In Deloitte’s late-2024 survey, 74% of firms said their most advanced GenAI initiatives met or exceeded ROI expectations (with 20% reporting >30% ROI). AI-driven projects have delivered both cost savings and new revenue streams. BCG notes that high-performing “AI leader” companies have seen ~1.5× higher revenue growth and shareholder returns than peers.

Yet many organizations struggle to translate pilots into value. Only about 26% of companies have scaled AI beyond proofs-of-concept and generated tangible value, per BCG. Common barriers include data quality, integration with legacy systems, and the need for talent and training. Indeed, surveys report that nearly all executives plan to keep ramping up AI investment (92% in one McKinsey report), but only ~1% consider their organizations “AI-mature” today. Internal alignment can be rocky: a 2024 study found that fewer than half of employees felt their company’s AI rollout was successful, and over half of workers admitted hiding their AI usage for fear of negative repercussions.

Major challenges loom. Cost and infrastructure remain huge factors: deploying large models requires powerful servers and energy, driving up budgets (as noted, hardware dominates spending). Governance and regulation are top concerns – companies are scrambling to comply with new laws (EU’s AI Act, industry standards, data privacy rules) and to establish internal oversight of AI risk. Workforce impact is also in focus: while some evidence suggests AI augments skilled workers (raising wages), there is fear and uncertainty among many employees.

In summary, by mid-2025 AI is everywhere in business planning and remains at or near its hype-peak in investment terms, but real-world deployment is uneven. Some firms report clear ROI and roll out useful AI tools, while many are still navigating governance, skill gaps, and technological limits. Gartner’s analysis reflects this mixed picture: generative AI is in the “Trough of Disillusionment” on the Hype Cycle even as spending and experimentation continue to grow.

Sources: Industry analyst reports and surveys from McKinsey, Gartner, BCG, Deloitte, Pew, and technology outlets (among others) were used to assess public sentiment, media trends, technology benchmarks, and enterprise adoption. These reports reflect data up through early 2025.